MSI Accelerates AI and Data Center Innovation with Next-Gen Server Solutions at SC25

ST. LOUIS, Missouri – November 18, 2025 – MSI, a leading global provider of high-performance server solutions, showcases its next-generation computing and AI innovations at SuperComputing 2025 (SC25), Booth #205. MSI introduces its ORv3 rack solution alongside a portfolio of power-efficient multi-node platforms and AI-optimized platforms built on NVIDIA MGX and NVIDIA DGX Station, designed for high-density environments and mission-critical workloads. These modular, scalable, rack-scale solutions are engineered for performance, energy efficiency, and flexibility, enabling current and next-generation data centers to accelerate deployment and scale with ease.

"Through close collaboration with industry leaders AMD, Intel, and NVIDIA, MSI continues to drive innovation across the data center ecosystem," said Danny Hsu, General Manager of the Enterprise Platform Solutions at MSI. "Our goal is to deliver scalable, energy-efficient infrastructure that empowers customers to accelerate AI development and next-generation computing with performance, reliability, and flexibility at scale."

Scaling Data Center Performance — From DC-MHS Architecture to Rack Solutions

MSI’s data center building blocks are developed on the DC-MHS (Datacenter Modular Hardware System) architecture, spanning host processor modules, Core Compute servers, Open Compute servers, and AI computing servers. This modular design standardizes hardware components, BMC architecture, and form factors, simplifying operations and reducing deployment complexity. With EVAC CPU heatsink support, data centers can maintain thermal efficiency while rapidly adapting to the growing demands of AI, analytics, and compute-intensive workloads. MSI’s modular approach helps operators deploy next-generation infrastructure faster and shorten time to market.

ORv3 Rack — Designed for Next-Generation Data Centers

MSI’s ORv3 21” 44OU rack is a fully validated, integrated solution that combines power, thermal, and networking systems to streamline engineering and accelerate deployment in hyperscale environments. Featuring sixteen CD281-S4051-X2 2OU DC-MHS servers, the rack utilizes centralized 48V power shelves and front-facing I/O, maximizing space for CPUs, memory, and storage while maintaining optimal airflow and simplifying maintenance.

Single-Socket AMD EPYC™ 9005 Server in ORv3 Architecture:

CD281-S4051-X2: 2OU 2-node server with 12 DDR5 DIMM slots and 12 E3.S 1T PCIe 5.0 x4 NVMe bays per node.
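As a rough illustration of what the rack configuration above adds up to, the following sketch totals the figures quoted in this release (sixteen 2OU servers, two nodes per server, 12 DIMM slots and 12 E3.S bays per node, in a 44OU rack). The `RackConfig` class and its field names are hypothetical, introduced only for this example, and the sketch assumes the DIMM and bay counts are both per node. The release does not say how the remaining rack units are allocated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackConfig:
    """Hypothetical container for the rack figures quoted in the release."""
    rack_height_ou: int
    servers: int
    server_height_ou: int
    nodes_per_server: int
    dimm_slots_per_node: int   # assumption: the DIMM count is per node
    nvme_bays_per_node: int

    def totals(self) -> dict:
        # Derive rack-level counts from the per-server and per-node figures.
        nodes = self.servers * self.nodes_per_server
        used_ou = self.servers * self.server_height_ou
        return {
            "nodes": nodes,
            "server_ou": used_ou,
            "remaining_ou": self.rack_height_ou - used_ou,
            "dimm_slots": nodes * self.dimm_slots_per_node,
            "nvme_bays": nodes * self.nvme_bays_per_node,
        }


# ORv3 44OU rack populated with sixteen CD281-S4051-X2 servers.
orv3 = RackConfig(
    rack_height_ou=44,
    servers=16,
    server_height_ou=2,
    nodes_per_server=2,
    dimm_slots_per_node=12,
    nvme_bays_per_node=12,
)
rack_totals = orv3.totals()

for name, value in rack_totals.items():
    print(f"{name}: {value}")
```

Under these assumptions the servers occupy 32OU of the rack and provide 32 single-socket nodes.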

DC-MHS Core Compute Servers — High-Density, Scalable Data Center Solutions

MSI’s Core Compute platforms maximize rack density and resource efficiency by integrating multiple compute nodes into a single high-density chassis. Each node is powered by either AMD EPYC 9005 Series processors (up to 500W TDP) or Intel® Xeon® 6 processors (up to 500W/350W TDP). Available in 2U 4-node and 2U 2-node configurations, these platforms deliver exceptional thermal performance and scalability for today’s data centers.

Single-Socket AMD EPYC 9005 Servers

CD270-S4051-X4: 2U 4-node server with 12 DDR5 DIMM slots and 3 PCIe 5.0 x4 U.2 NVMe bays per node.

CD270-S4051-X2: 2U 2-node server with 12 DDR5 DIMM slots and 6 PCIe 5.0 x4 U.2 NVMe bays per node.

Single-Socket Intel Xeon 6 Servers

CD270-S3061-X4: 2U 4-node server with 16 DDR5 DIMM slots and 3 PCIe 5.0 x4 U.2 NVMe bays per node.

CD270-S3071-X2: 2U 2-node server with 12 DDR5 DIMM slots and 6 PCIe 5.0 x4 U.2 NVMe bays per node.
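To compare the four Core Compute models on a per-chassis basis, the per-node figures listed above can be multiplied by the node count. This is a minimal sketch that assumes both the DIMM and NVMe counts in each listing are per node; the `chassis_totals` helper and the tuple layout are illustrative only.

```python
# (model, nodes per 2U chassis, DIMM slots per node, U.2 NVMe bays per node)
# Figures are taken from the listings above; the per-node reading of the
# DIMM counts is an assumption.
CORE_COMPUTE = [
    ("CD270-S4051-X4", 4, 12, 3),
    ("CD270-S4051-X2", 2, 12, 6),
    ("CD270-S3061-X4", 4, 16, 3),
    ("CD270-S3071-X2", 2, 12, 6),
]


def chassis_totals(nodes: int, dimms_per_node: int, bays_per_node: int) -> tuple:
    """Return (DIMM slots, NVMe bays) for one fully populated 2U chassis."""
    return nodes * dimms_per_node, nodes * bays_per_node


totals = {
    model: chassis_totals(nodes, dimms, bays)
    for model, nodes, dimms, bays in CORE_COMPUTE
}

for model, (dimm_slots, nvme_bays) in totals.items():
    print(f"{model}: {dimm_slots} DIMM slots, {nvme_bays} NVMe bays per 2U chassis")
```

Under this reading, all four models come to 12 NVMe bays per 2U chassis, and the 4-node and 2-node variants differ mainly in how that storage and memory are divided among nodes.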

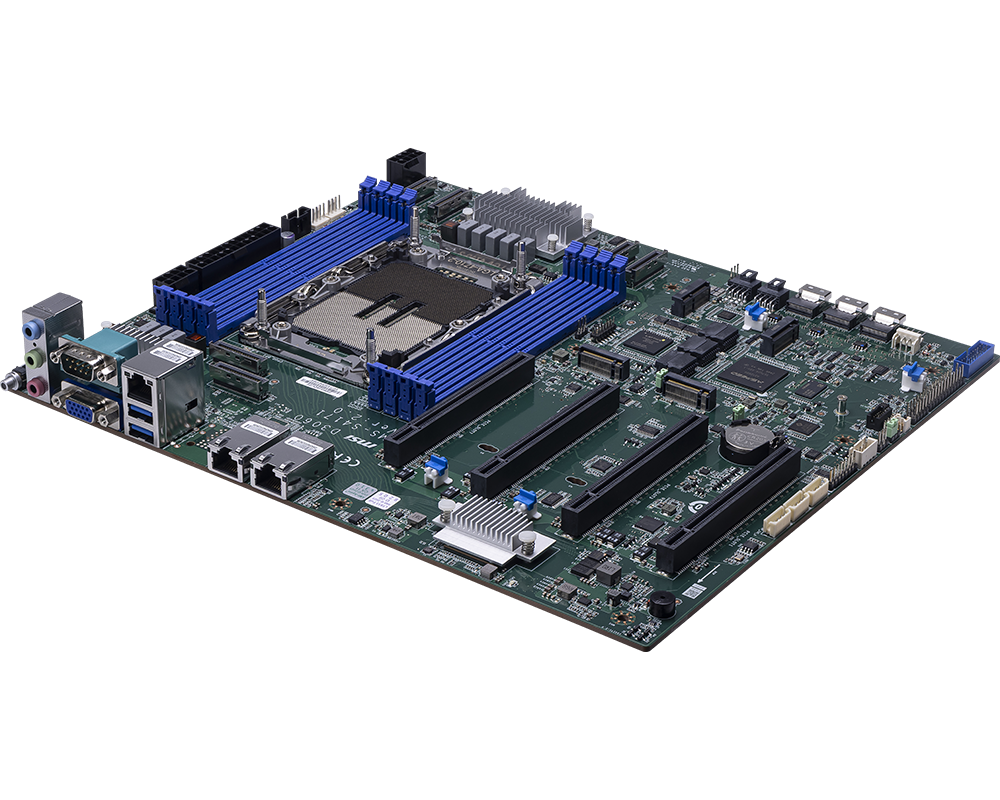

DC-MHS Enterprise Servers — High-Efficiency Platforms for Cloud Workloads

Built on the DC-MHS architecture, MSI’s enterprise server platforms deliver exceptional memory capacity, extensive I/O options, and high TDP CPU compatibility to handle demanding cloud, virtualization, and storage applications. Supporting both AMD EPYC 9005 Series and Intel Xeon 6 processors, these modular solutions provide flexible performance for diverse data center workloads.

Single-Socket AMD EPYC 9005 Servers

CX271-S4056: 2U server with 24 DDR5 DIMM slots and configurations of 8 or 24 PCIe 5.0 U.2 NVMe bays.

CX171-S4056: 1U server with 24 DDR5 DIMM slots and 12 PCIe 5.0 U.2 NVMe bays.

Dual-Socket Intel Xeon 6 Servers

CX270-S5062: 2U server with 32 DDR5 DIMM slots and configurations of 8 or 24 PCIe 5.0 U.2 NVMe bays.

CX170-S5062: 1U server with 32 DDR5 DIMM slots and 12 PCIe 5.0 U.2 NVMe bays.

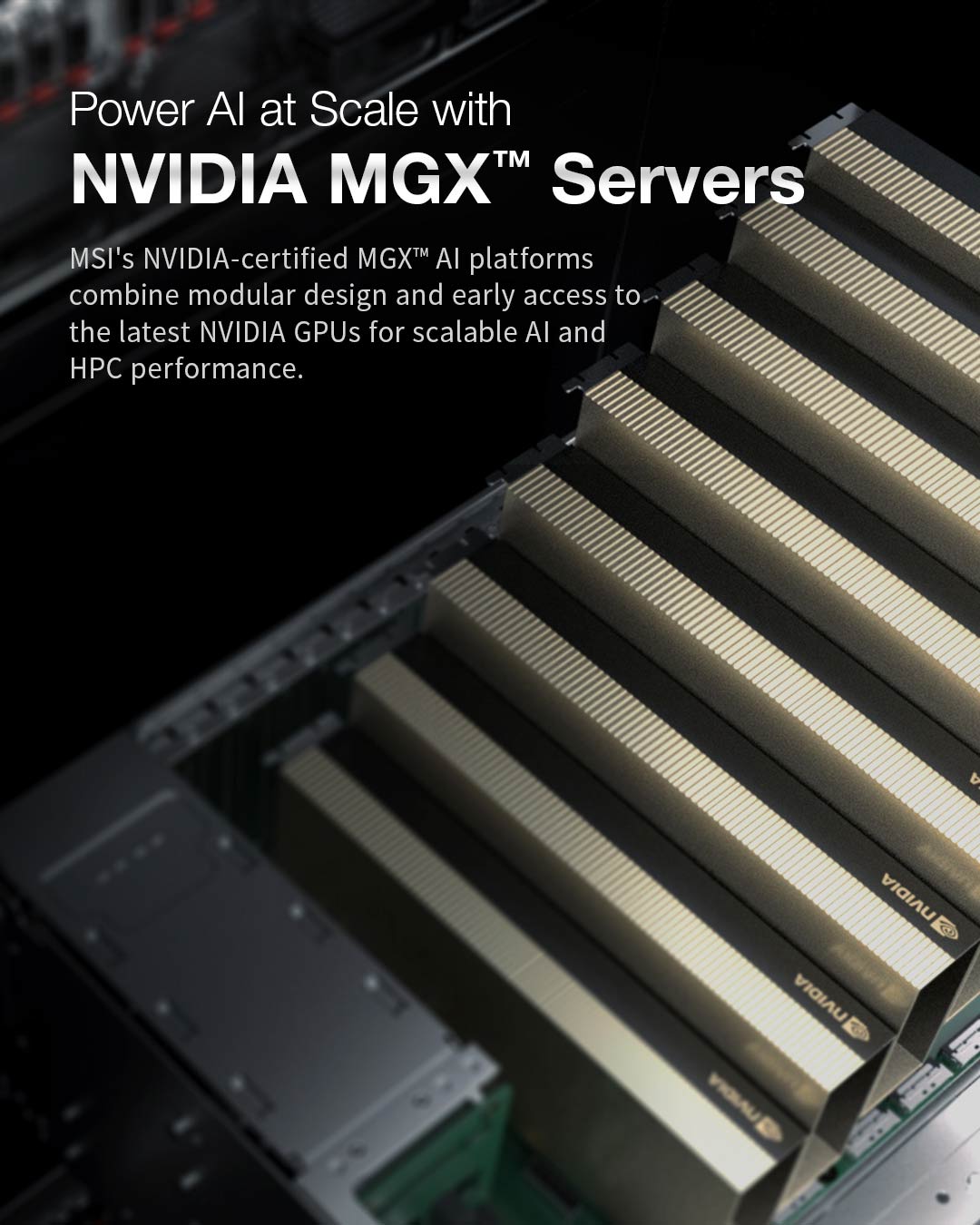

Next-Generation AI Solutions Accelerated by NVIDIA

MSI introduces a new generation of AI computing solutions built on the NVIDIA MGX and NVIDIA DGX Station reference architectures. The lineup includes AI servers and an AI station supporting the latest NVIDIA Hopper GPUs, NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, and NVIDIA Blackwell Ultra GPUs, engineered to meet diverse deployment needs, from large-scale data center training to edge inferencing and AI development on the desktop.

MSI’s AI servers are purpose-built for high-performance computing and AI workloads. The 4U AI platforms offer flexible configurations with both Intel Xeon and AMD EPYC processors, supporting up to 600W GPUs for maximum performance. These platforms are ideal for large language models (LLMs), deep learning training, and NVIDIA Omniverse workloads.

AI Servers

CG481-S6053: Dual AMD EPYC 9005 CPUs, eight PCIe 5.0 x16 FHFL dual-width GPU slots, 24 DDR5 DIMMs, eight 2.5-inch U.2 NVMe bays, and eight 400G Ethernet ports powered by NVIDIA ConnectX-8 SuperNICs.

CG480-S5063: Dual Intel Xeon 6 CPUs, eight PCIe 5.0 x16 FHFL dual-width GPU slots, 32 DDR5 DIMMs, and twenty PCIe 5.0 E1.S NVMe bays.

CG290-S3063: 2U AI server powered by a single Intel Xeon 6 CPU with 16 DDR5 DIMMs and four FHFL dual-width GPU slots (up to 600W each), ideal for edge computing and small-scale inference deployments.

AI Station

For developers who need data center-level performance in a workstation form factor, the MSI AI Station CT60-S8060 brings the power of the NVIDIA DGX Station to the desktop. Built with the NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip and up to 784GB of unified memory, it delivers the compute performance needed to develop, train, and deploy large-scale AI models, all from the deskside.

Supporting Resources:

Discover how MSI’s OCP ORv3-compatible nodes deliver optimized performance for hyperscale cloud deployments.

Watch MSI’s 4U and 2U NVIDIA MGX AI platforms, built on NVIDIA accelerated computing to deliver the performance for tomorrow’s AI workloads.